Running a Neural Network on a 1984 Mac is Possible with Some Clever Workarounds

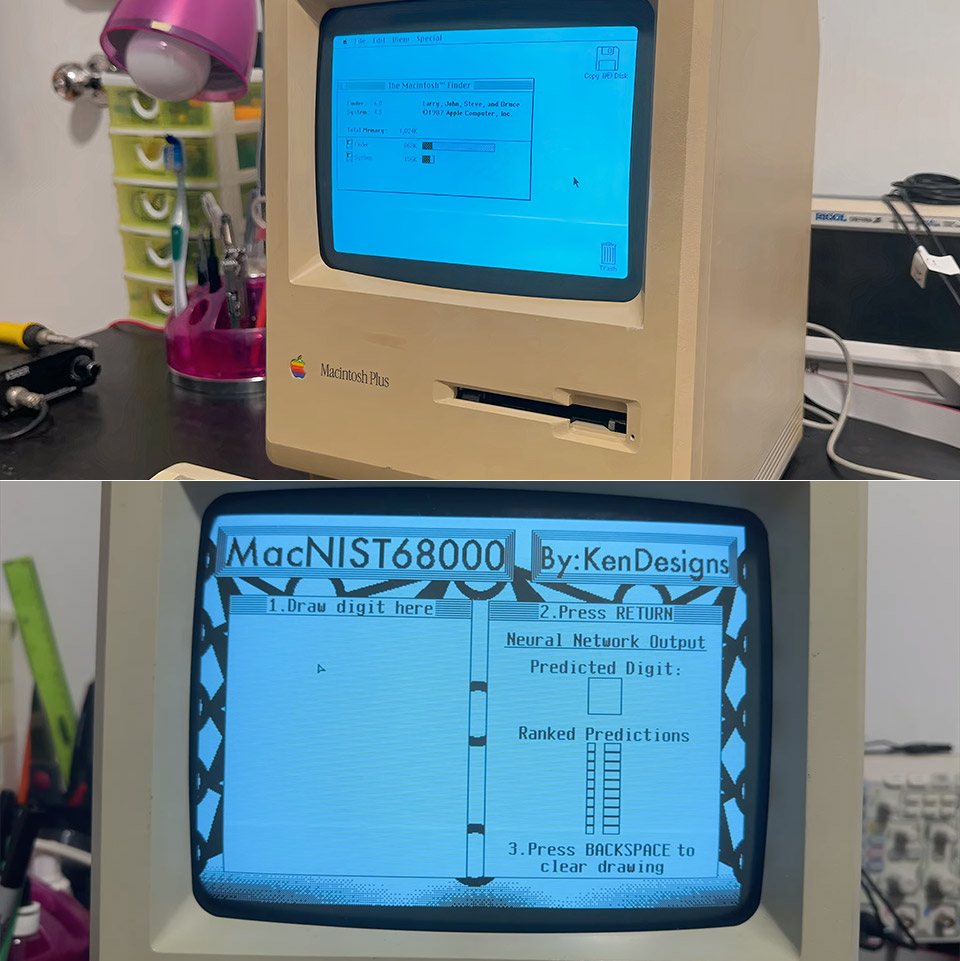

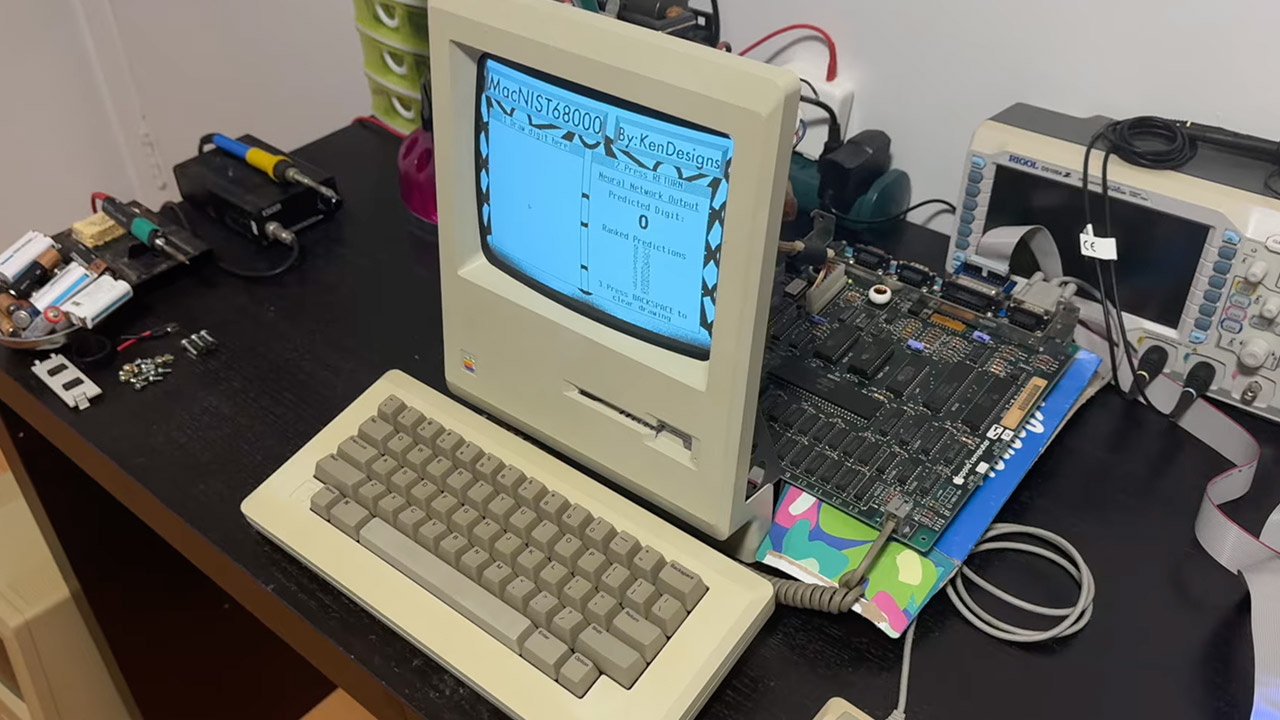

In a world where computers have terabytes of storage and processors with billions of transistors, a maker named KenDesigns has done something remarkable: run a neural network on the original 1984 Macintosh, a machine so old it predates the World Wide Web.

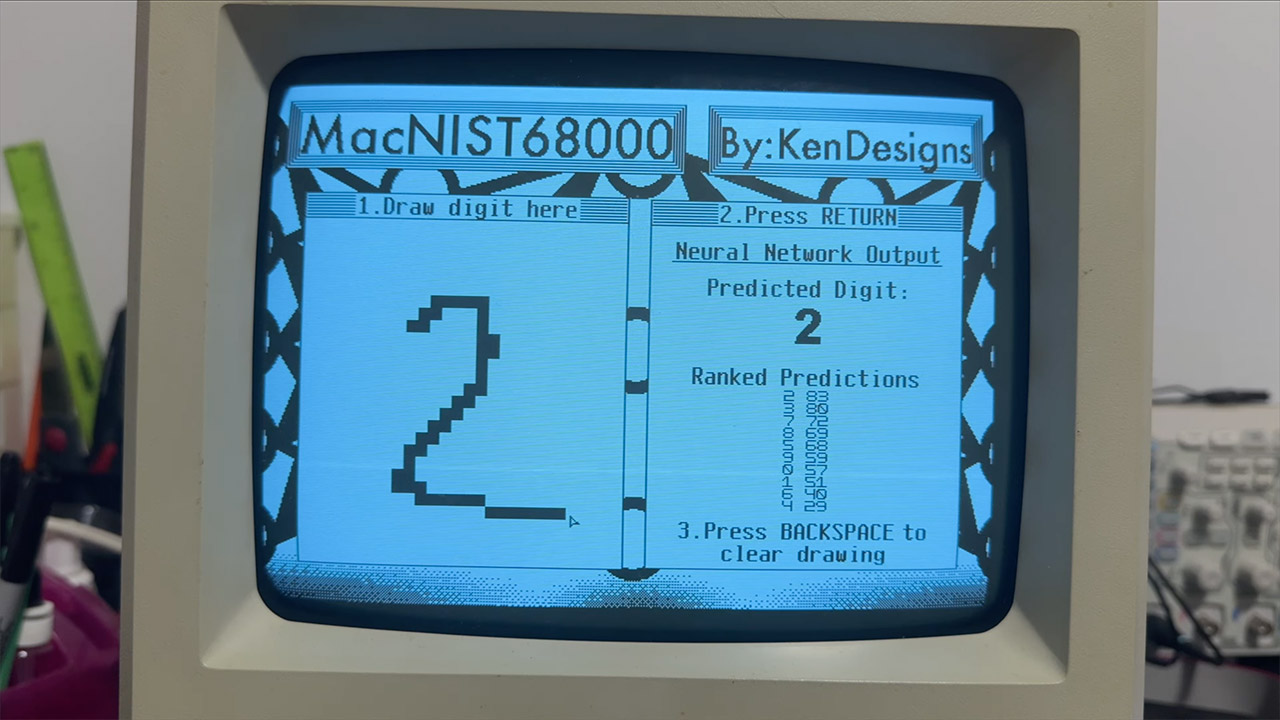

KenDesigns chose the MNIST dataset, a collection of handwritten digits widely used to train and benchmark neural networks. The dataset, created in the 1990s, consists of 28×28-pixel images of the digits 0 through 9, which makes it a good fit for a simple yet functional neural network. The goal was a program that lets you draw a digit on the Mac’s screen, has the neural network analyze it, and tells you which number it is. On modern hardware this is trivial, but the original Macintosh (specifically the Mac 128K, with 128 KB of RAM and a Motorola 68000 processor) makes it a real challenge: it has no floating point unit, and its memory is so limited it could barely hold a single high-resolution photo. The Mac seemed an unlikely candidate for AI, but KenDesigns found a way.
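To make the task concrete, here is a minimal Python sketch of what such a digit classifier computes: flatten the 28×28 drawing into 784 inputs, pass them through one hidden layer, and pick the digit with the highest score. The layer sizes (32 hidden nodes, 10 outputs), the ReLU activation and the helper names are assumptions for illustration, not KenDesigns’ code, and the weights are random rather than trained.

```python
import random

# Hypothetical sizes: 28x28 input pixels, 32 hidden nodes, 10 digit classes.
N_INPUT, N_HIDDEN, N_OUTPUT = 28 * 28, 32, 10


def dense(inputs, weights, biases):
    """Fully connected layer: each output is its bias plus sum(input * weight)."""
    return [b + sum(x * w for x, w in zip(inputs, row))
            for row, b in zip(weights, biases)]


def relu(values):
    """Clamp negative activations to zero."""
    return [max(0.0, v) for v in values]


def predict_digit(image, w1, b1, w2, b2):
    """Classify a 28x28 list of pixel intensities in [0, 1]."""
    flat = [p for row in image for p in row]      # 784 inputs
    hidden = relu(dense(flat, w1, b1))            # 784 -> 32
    scores = dense(hidden, w2, b2)                # 32 -> 10
    return max(range(N_OUTPUT), key=lambda d: scores[d])  # best-scoring digit


# Random, untrained weights: enough to show the shapes, not to recognize digits.
rng = random.Random(0)
w1 = [[rng.uniform(-0.1, 0.1) for _ in range(N_INPUT)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
w2 = [[rng.uniform(-0.1, 0.1) for _ in range(N_HIDDEN)] for _ in range(N_OUTPUT)]
b2 = [0.0] * N_OUTPUT

drawing = [[rng.random() for _ in range(28)] for _ in range(28)]
print(predict_digit(drawing, w1, b1, w2, b2))
```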


The first challenge was memory: the Mac 128K’s 128 KB of RAM had to hold the whole neural network, including its weights and biases, plus the software itself. To put this in context, a single fully connected layer of 32 nodes fed by a 28×28-pixel input image (784 inputs) has 784 × 32 = 25,088 weights plus 32 biases, or 25,120 parameters. Each is normally stored as a 32-bit floating point value, so that one layer alone needs about 100 KB, most of the machine’s memory. On a modern computer this is nothing, but on a Mac with 128 KB of RAM it’s a disaster. KenDesigns’ initial thought was to shrink the model, reducing the number of nodes in the hidden layer until it fit in RAM. This “just do it” approach worked, but it was slow and inaccurate: it took nearly 10 seconds to process a single image, and the smaller model sacrificed so much precision that it often misidentified digits. Something smarter was needed.
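The parameter arithmetic above can be checked with a few lines of Python. This is a back-of-the-envelope sketch that assumes a single fully connected layer with one bias per node; `dense_layer_params` is a hypothetical helper name.

```python
def dense_layer_params(n_in, n_out):
    """One weight per input-output pair, plus one bias per output node."""
    return n_in * n_out + n_out


params = dense_layer_params(28 * 28, 32)
float32_bytes = params * 4          # 32-bit floats take 4 bytes each
ram_bytes = 128 * 1024              # Mac 128K main memory

print(params)                       # 25120
print(float32_bytes)                # 100480
print(f"{float32_bytes / ram_bytes:.0%} of 128 KB")
```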

Enter quantization, a technique that compresses a neural network without reducing the number of its parameters. Weights determine how strongly one node affects another, and they are typically stored as floating point numbers for precision, which takes up a lot of memory. Quantization converts these weights into 8-bit integers that take a quarter of the space. Instead of storing a weight of 0.3472 as a 32-bit float, quantization maps it to an integer between -128 and 127, together with a scaling factor and an offset (often called the zero point) that preserve the original range of values. This cut memory usage enough for a more complex model to fit in the Mac’s 128 KB of RAM. It also addressed the Mac’s second major problem: the lack of a floating point unit.
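A minimal Python sketch of the scale-and-offset (affine) int8 quantization described above. It assumes a single scale and zero point for the whole weight list (per-tensor quantization); the function names and sample weights are hypothetical, and this is a generic version of the technique rather than necessarily the exact scheme KenDesigns used.

```python
def choose_params(values, qmin=-128, qmax=127):
    """Pick scale and zero point so the value range maps onto [qmin, qmax].

    Convention: real_value ~= (q - zero_point) * scale.
    """
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0
    scale = (hi - lo) / (qmax - qmin) or 1.0                # avoid a zero scale
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Float -> int8 code, clamped to the representable range."""
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q, scale, zero_point):
    """int8 code -> approximate float."""
    return (q - zero_point) * scale


weights = [0.3472, -0.51, 0.02, 0.9, -0.25]
scale, zp = choose_params(weights)
codes = [quantize(w, scale, zp) for w in weights]       # 1 byte each instead of 4
approx = [dequantize(q, scale, zp) for q in codes]
print(scale, zp, codes)
print(approx)
```

Each stored weight now costs one byte, and the only extra storage is one scale and one zero point per tensor; the price is a rounding error of at most about one quantization step per weight.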

The Motorola 68000, running at 7.8 MHz, handles integer math in hardware but has no floating point support, so the Mac would normally rely on slow software emulation for decimal math, and inference times would suffer. Quantization sidesteps this by doing the math directly on 8-bit integers. KenDesigns wisely avoided converting back to floating point during inference: the network runs on fast integer math, multiplying and adding the quantized values and compensating for the scaling factors and offsets only at the end. The result was responsive predictions with only a small drop in accuracy compared to a full floating point model.
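The sketch below illustrates integer-only inference for a single dot product, assuming per-tensor affine quantization. Offsets are subtracted and products are summed in a plain integer accumulator; the combined scale is then applied with one integer multiply and a right shift, using a multiplier precomputed offline. The fixed-point requantization step, the 16-bit shift, the output scale and all names are assumptions chosen for illustration; the article only says the scaling factors and offsets are compensated for at the end.

```python
SHIFT = 16  # fraction bits for the fixed-point multiplier (an assumption)


def choose_params(values, qmin=-128, qmax=127):
    # Affine int8 parameters: real ~= (q - zero_point) * scale.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    return scale, zero_point


def quantize(values, scale, zero_point):
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def int_dot(q_x, q_w, zp_x, zp_w):
    # Integer-only inner loop: subtract offsets, multiply, accumulate.
    acc = 0
    for qx, qw in zip(q_x, q_w):
        acc += (qx - zp_x) * (qw - zp_w)
    return acc


def requantize(acc, multiplier, zp_out):
    # Apply the combined scale with one integer multiply and a right shift.
    return max(-128, min(127, ((acc * multiplier) >> SHIFT) + zp_out))


# Offline, on a machine with floating point: quantize and precompute.
weights = [0.3472, -0.51, 0.9]
inputs = [0.5, -0.25, 1.0]
s_w, zp_w = choose_params(weights)
s_x, zp_x = choose_params(inputs)
s_out, zp_out = 0.02, 0                      # hypothetical output scale
multiplier = round(s_x * s_w / s_out * (1 << SHIFT))

# On the device: integers only.
acc = int_dot(quantize(inputs, s_x, zp_x), quantize(weights, s_w, zp_w), zp_x, zp_w)
q_out = requantize(acc, multiplier, zp_out)
float_reference = sum(x * w for x, w in zip(inputs, weights))
print(q_out, (q_out - zp_out) * s_out, float_reference)
```

After subtracting its zero point, each operand lies between -255 and 255, so every product fits the 68000’s 16×16→32-bit signed multiply (`MULS`), and a 784-input accumulator stays well within 32 bits.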

Another breakthrough was bypassing the Mac operating system entirely. Even when idle, the original Macintosh system software occupies about 20 KB of RAM, roughly 16% of the Mac 128K’s memory. Add the 22 KB screen frame buffer, and the neural network would be starved for memory. KenDesigns solved this by creating a custom software development kit (SDK) that runs “bare metal”, meaning without an operating system. This required writing a custom bootloader, a program that initializes the Mac and loads the neural network software. Removing the operating system freed up valuable RAM, so the model and its interactive interface could coexist within the Mac’s limits.
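The memory budget can be tallied the same way. This sketch takes the article’s figure of about 20 KB for the system software as given (treating 1 KB as 1,024 bytes, an assumption) and computes the frame buffer from the Mac 128K’s 512×342, one-bit-per-pixel screen; it ignores program code, the stack and other overheads.

```python
RAM = 128 * 1024                         # Mac 128K: 131,072 bytes
SCREEN_W, SCREEN_H = 512, 342            # built-in monochrome display
framebuffer = SCREEN_W * SCREEN_H // 8   # one bit per pixel, 8 pixels per byte
system_software = 20 * 1024              # the article's ~20 KB estimate

with_os = RAM - framebuffer - system_software
bare_metal = RAM - framebuffer

print(framebuffer)                                       # 21888
print(f"System software: {system_software / RAM:.1%} of RAM")
print(f"Left with the OS:  {with_os} bytes")
print(f"Left bare metal:   {bare_metal} bytes")
```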

[Source]
